A/B testing is critical to improving user engagement and conversion rates on a website. It compares two variants of a webpage to find out which one performs better. This article walks you through the steps to run an A/B test successfully, so that you base your decisions on data and improve your website’s performance.

Step 1: Create Your Hypothesis

- Find the Change: Decide what you want to modify on your page and why. For example, users might not be clicking a button on the main page.

- Goals of the Change: Consider how tweaks such as a different color or size could affect user behavior.

Step 2: Choose the Test URL

- Choose an Appropriate Page: The page where you intend to run the test should receive enough traffic over a two-week period or another suitable test length.
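What counts as “enough traffic” depends on your current conversion rate and on how small a difference you want to detect. The sketch below is one rough way to estimate it, using the standard sample-size approximation for comparing two proportions. It is not a Plerdy feature; the function names, the 5% significance level, and the 80% power are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sessions_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate sessions needed in EACH variant to detect a relative lift.

    baseline_rate: current conversion rate of the page, e.g. 0.05 for 5%.
    relative_lift: smallest relative change worth detecting, e.g. 0.2 for +20%.
    alpha and power are conventional defaults, assumed here for illustration.
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")

    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)

    # Critical values of the standard normal distribution.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def days_needed(daily_sessions, per_variant):
    """Days required when daily traffic is split evenly between two variants."""
    return math.ceil(2 * per_variant / daily_sessions)


if __name__ == "__main__":
    needed = sessions_per_variant(baseline_rate=0.05, relative_lift=0.2)
    print("Sessions per variant:", needed)
    print("Days at 1,500 sessions/day:", days_needed(1500, needed))
```

If the estimated number of days is far longer than two to three weeks, consider testing a higher-traffic page or a bolder change.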

Step 3: Define Your Goal

- Target Page: Choose a page, say a “Thank You” page, that the modification will influence.

- Site Activity: Alternatively, the goal can be a particular site event, such as a button click.

Setting Up A/B Testing in Plerdy

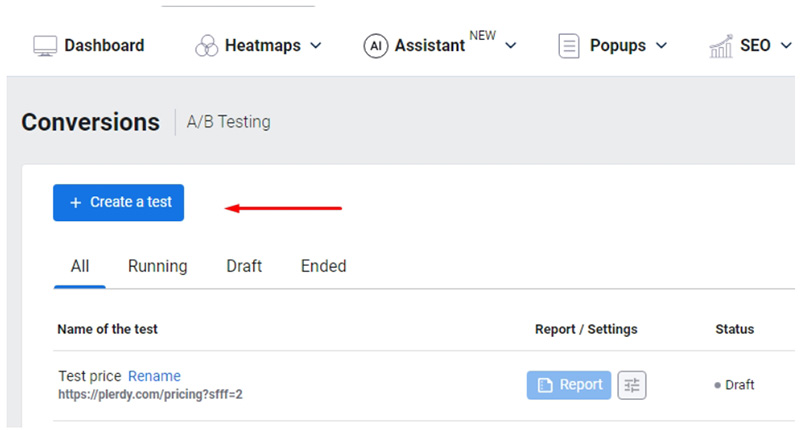

- Access A/B Testing: In your Plerdy account, go to Conversions > A/B Testing and click the blue “Create a test” button.

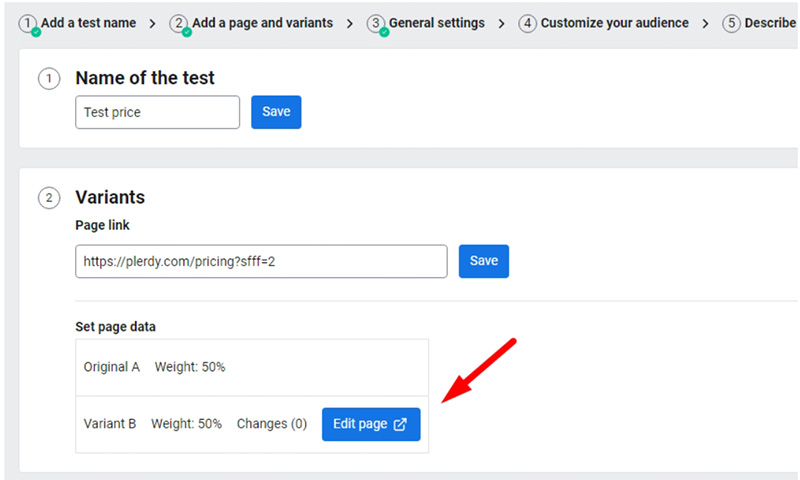

- Test Name: Enter a name for your test.

- Test URL: Add the URL where the test will run.

- Tracking Code: Go to “Settings,” copy the main and additional tracking codes, and add them to the necessary pages, including the goal page, if the “A/B testing script” has not been added yet. Then clear the site cache and confirm that the tracking code is installed.

Test Variants and Settings

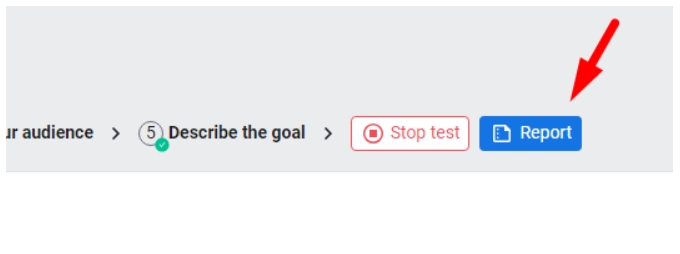

- End Date: Set when you plan to end the test. Alternatively, manually stop the test after 2-3 weeks if enough data is collected.

- Audience: Add rules if necessary, including countries and devices.

- Goals: Add the exact URL with https:// or part of the URL for tracking. Usually, this is the final page affected by Variant B.

- Events: Add a goal as an event, following instructions for class or ID. Note that event data aligns with the page view limit for heatmaps. Additional limits can be purchased if needed.

- Sending Events to GA4: Select the checkbox to send event IDs to GA4.

- Description: Add a note about what you changed and the test’s goal so that you can recall them when you review the test in 2-3 weeks.

Editing Variant B

- Editing Page: Upon opening the Variant B editing panel, select the checkboxes for “Select element” and “Interactive Mode.”

- Make Changes: Apply 1-3 changes to an element such as color, size, or hiding it. You can also edit HTML content like links or images.

- Save Changes: Save individual element changes, and use the “Save all changes” button to save all test modifications.

Launching the Test

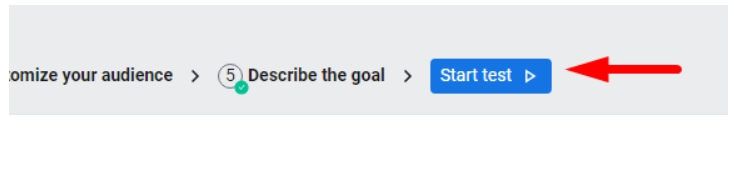

- Review Settings: Open the test settings page in a new tab and refresh.

- Start the Test: Click the “Start test” button. You can also view all changes made to the website page by selecting “Show all changes.”

Analyzing A/B Testing Results

- Session Distribution: User sessions are split almost 50/50 between the two variants.

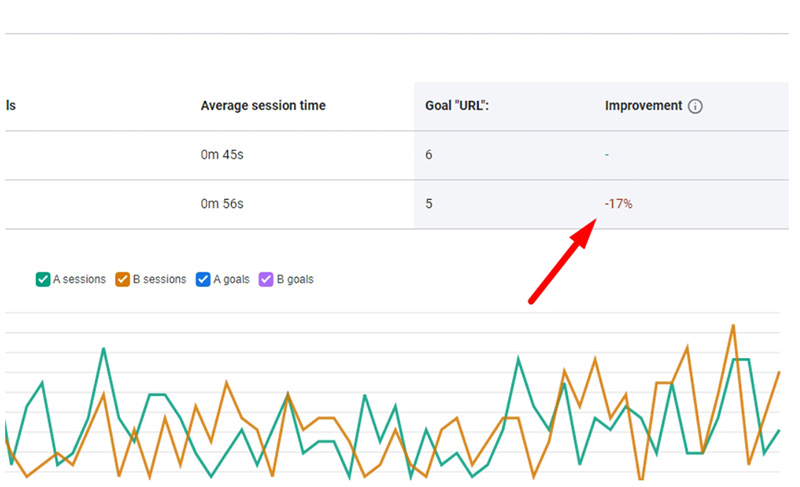

- Key Metrics: Check the “Improvement” column on the Total Sessions tab to find the winning variant.

- Device Analysis: Review which device type contributed most to the winning variant’s result.

- Traffic Analysis: Find out which traffic channel worked best for you.
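To make the improvement figure easier to sanity-check, here is a minimal sketch that recomputes it from raw counts. It assumes that improvement means the relative change in conversion rate of Variant B over Variant A; the article does not state the exact formula Plerdy uses, and the function names and sample numbers are hypothetical.

```python
def conversion_rate(conversions, sessions):
    """Share of sessions that reached the goal."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return conversions / sessions


def relative_improvement(rate_a, rate_b):
    """Relative change of B over A, in percent (assumed definition)."""
    if rate_a == 0:
        raise ValueError("Variant A has no conversions; improvement is undefined")
    return (rate_b - rate_a) / rate_a * 100


def improvement_by_segment(results):
    """Compute improvement per segment (device type, traffic channel, ...).

    results maps a segment name to {"A": (conversions, sessions),
    "B": (conversions, sessions)}.
    """
    improvements = {}
    for segment, variants in results.items():
        rate_a = conversion_rate(*variants["A"])
        rate_b = conversion_rate(*variants["B"])
        improvements[segment] = relative_improvement(rate_a, rate_b)
    return improvements


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    by_device = {
        "desktop": {"A": (40, 1000), "B": (50, 1000)},
        "mobile": {"A": (30, 1200), "B": (27, 1200)},
    }
    for device, value in improvement_by_segment(by_device).items():
        print(f"{device}: {value:+.1f}%")
```

Breaking the result down by segment this way shows whether one device type or channel drives the overall outcome.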

Interpreting Negative Values for Variant B

If Variant B shows a negative value in the improvement metric, Variant A may be outperforming Variant B. In that case, you have two options:

- Wait a Few More Days: With low traffic or an initial shift in user behavior, early results may not reflect actual performance. Waiting a few more days provides the additional data needed for a confident conclusion.

- Consider Variant B Unsuccessful: If the negative trend persists consistently over a significant period, it is reasonable to conclude that Variant B is underperforming relative to Variant A. In that case, examine which aspects of Variant B might be causing the performance drop and consider changing or removing the modifications made in this variant.
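One way to judge whether a negative value reflects a real difference or just noise is a two-proportion z-test on the raw counts. The sketch below is a generic statistical check, not something the Plerdy interface is described as doing; the function name, the sample numbers, and the 0.05 threshold are assumptions.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, sessions_a, conv_b, sessions_b):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). A negative z means Variant B converts worse than A.
    """
    rate_a = conv_a / sessions_a
    rate_b = conv_b / sessions_b

    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (conv_a + conv_b) / (sessions_a + sessions_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / sessions_a + 1 / sessions_b))
    if std_error == 0:
        raise ValueError("no variation in the data; the test is undefined")

    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: Variant B looks worse, but is the gap reliable?
    z, p = two_proportion_z_test(conv_a=50, sessions_a=1000,
                                 conv_b=40, sessions_b=1000)
    print(f"z = {z:.2f}, p-value = {p:.3f}")
    if p < 0.05:
        print("The difference is unlikely to be noise.")
    else:
        print("Not enough evidence yet; consider collecting more data.")
```

A high p-value supports waiting a few more days, while a low p-value with a negative z supports treating Variant B as unsuccessful.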

Tips for Checking Variant B

- Multiple Browsers: Check Variant B in several different browsers, because you cannot see both variants in the same browser session within half an hour.

FAQ: How to Run A/B Testing on a Website

1. What is A/B testing, and why is it important for a website?

A/B testing is a method of comparing two webpage variants to determine which performs better in terms of user engagement or conversions. It helps optimize website elements based on real user data, improving site performance and business outcomes.

2. How do I set up an A/B test using Plerdy?

To start an A/B test in Plerdy, go to Conversions > A/B Testing, click “Create a test”, and enter the test name and URL. Then, install the tracking code, define goals, set test variants, and configure audience rules. Finally, launch the test and analyze the results.

3. How should I determine the right test variant changes?

Variant B should have 1-3 modifications, such as color, size, or content adjustments. Small but impactful changes provide clearer insights into user behavior differences without overwhelming the test.

4. What metrics should I track to analyze A/B test results?

Key performance indicators include session distribution (50/50 split), improvement percentage, traffic sources, and device-specific performance. Checking these helps determine the winning variant and guides future optimizations.

5. What should I do if Variant B performs worse than Variant A?

If Variant B shows negative improvement, wait a few more days to collect sufficient data. If the trend remains negative, analyze what changes might have caused the drop and consider modifying or reverting them.

Final Thought

A/B testing is an effective tool for improving a website. These guidelines will help you make sound decisions grounded in user preferences and behavior. Remember that effective A/B testing depends on ongoing learning and adaptation. Happy testing!