Heatmaps are one of the fastest ways to see what your visitors are actually doing on a page. Not what you hoped they’d do. Not what your stakeholders believe they do. What they do.

But heatmaps are also easy to misuse. Teams stare at red spots, make a “quick fix,” ship it, and then wonder why conversions didn’t move. Here’s the catch: a heatmap is a diagnostic tool, not a magic button. It shows where it hurts. It doesn’t automatically tell you what to change.

This guide is written for business owners, marketers, UX designers, and ecommerce teams who want practical outcomes: less wasted traffic, fewer UX leaks, and more conversions. You’ll get clear workflows, decision rules, and real-world caveats—because yes, it depends. And that’s not a cop-out. It’s how good CRO work actually looks.

Screenshot idea: A homepage click heatmap where the biggest hot spot is a non-clickable hero image, while the primary CTA is cold.

What Website Heatmaps Are And What They’re Not

A website heatmap is a visual layer on top of a page that shows aggregated interaction data. Instead of reading rows of events, you see patterns: where people click, how far they scroll, where they move their cursor, where they hesitate, and where frustration shows up (like dead clicks or rage clicks).

Think of it like this:

- Analytics tells you what happened (bounce rate, conversion rate, exits).

- Heatmaps tell you where it happened (which elements are pulling attention or creating confusion).

- Session replay tells you why it happened (the story of a real user’s journey).

The output of a heatmap study is not a PDF report. It’s shipped changes plus validation. A study that ends with “interesting” is just entertainment. A study that ends with “we shipped two fixes and rechecked impact” is CRO.

Heatmaps are fast, but your process can still be slow: if approvals take a week, the sprint slows down.

Screenshot idea: A scroll heatmap on a long landing page showing a sharp drop before testimonials and before the pricing section.

Types Of Heatmaps You’ll Actually Use

Most teams don’t need ten exotic map types. They need a small set used consistently, with segmentation and follow-up validation. Here are the types that show up in real CRO work.

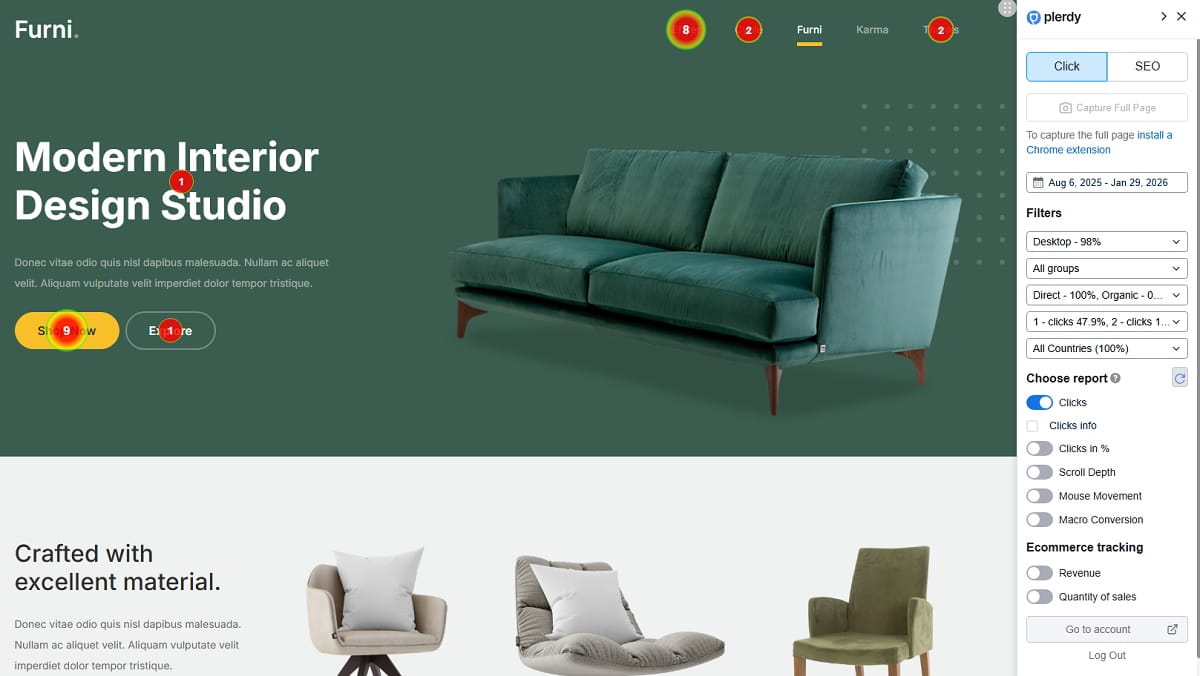

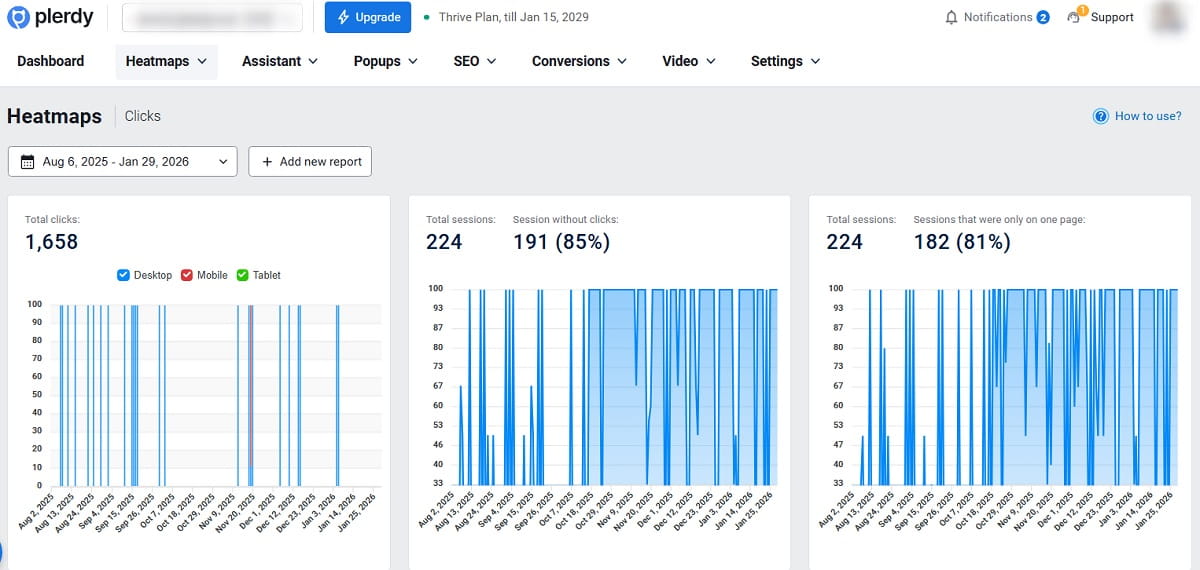

Click Heatmaps

A click heatmap shows where users click or tap. It’s the quickest way to find mismatches between what looks clickable and what is clickable.

- Dead clicks: clicks on elements that do nothing. Often caused by misleading design, broken links, unclickable images that look interactive, or a UI that trains people to click the wrong thing.

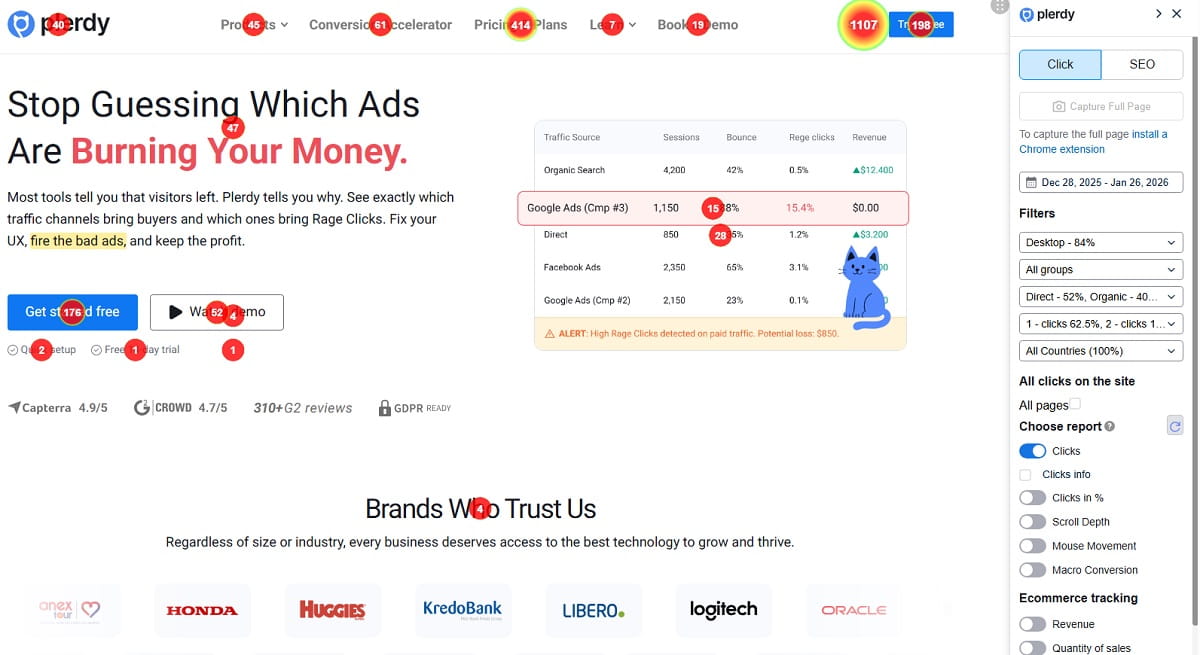

- Rage clicks: repeated rapid clicks in the same area. Often a frustration signal: lag, broken interactions, confusing UI, or a modal that won’t close.
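Each tool defines these signals its own way, and this guide doesn’t give thresholds. The sketch below shows the idea under some assumptions. It uses raw click events from one session, exported with a timestamp, page coordinates, and a flag for whether the target element was interactive. These are hypothetical field names. The thresholds (3 clicks, 500 ms gaps, a 30 px radius) are illustrative, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Click:
    t_ms: int           # timestamp in milliseconds (hypothetical export field)
    x: float            # page x-coordinate in CSS pixels
    y: float            # page y-coordinate in CSS pixels
    interactive: bool   # True if the target was a link, button, input, etc.


def dead_clicks(clicks):
    """Clicks that landed on elements that do nothing when clicked."""
    return [c for c in clicks if not c.interactive]


def rage_bursts(clicks, min_clicks=3, max_gap_ms=500, radius_px=30):
    """Return bursts of rapid clicks in roughly the same spot.

    A click joins the current burst if it comes within max_gap_ms of the
    previous click and within radius_px of the burst's first click.
    All thresholds are illustrative assumptions.
    """
    bursts, current = [], []
    for c in sorted(clicks, key=lambda k: k.t_ms):
        if current and (
            c.t_ms - current[-1].t_ms <= max_gap_ms
            and abs(c.x - current[0].x) <= radius_px
            and abs(c.y - current[0].y) <= radius_px
        ):
            current.append(c)
        else:
            if len(current) >= min_clicks:
                bursts.append(current)
            current = [c]
    if len(current) >= min_clicks:
        bursts.append(current)
    return bursts


# One session: three fast clicks on a button, then one click on a static image.
session = [
    Click(0, 100, 200, True),
    Click(200, 102, 201, True),
    Click(400, 101, 199, True),
    Click(5000, 400, 50, False),
]
print(len(rage_bursts(session)))   # 1 burst
print(len(dead_clicks(session)))   # 1 dead click
```

In practice you would run this per session and then count how many sessions contain a burst near the same element. That count is what a rage-click overlay summarizes.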

We test the highest-traffic step first so results show up faster. Click heatmaps are best on pages that already get meaningful traffic.

Screenshot idea: Click heatmap on a product page showing dead clicks on a non-clickable product image.

Scroll Heatmaps

A scroll heatmap shows how far down the page visitors go. It answers: “Did they even see it?”

One warning that saves teams: scroll ≠ read. A visitor can scroll fast, skip half the content, and still reach the bottom. Use scroll maps for visibility, then pair with other signals to infer comprehension.
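Under the hood, a scroll map is a reach curve: for each depth, the share of sessions that scrolled at least that far. Here is a minimal sketch. It assumes you can export each session’s maximum scroll depth as a percentage of page height, which is a hypothetical format. Note that it measures visibility only, not reading.

```python
def scroll_reach(max_depths_pct, depth_pct):
    """Share of sessions whose deepest scroll reached at least depth_pct.

    max_depths_pct: one value per session, the maximum scroll depth as a
    percentage of page height (hypothetical export format).
    """
    if not max_depths_pct:
        return 0.0
    reached = sum(1 for d in max_depths_pct if d >= depth_pct)
    return reached / len(max_depths_pct)


sessions = [100, 80, 45, 30, 95, 20, 60, 100]
for depth in (25, 50, 75, 100):
    print(f"{depth}% of page: {scroll_reach(sessions, depth):.0%} of sessions")
```

To ask “did they see the testimonials?”, plug in the depth where that block starts on the page instead of a round number.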

Screenshot idea: Scroll heatmap on a pricing page showing most users never reach the plan comparison table.

Move Or Hover Heatmaps

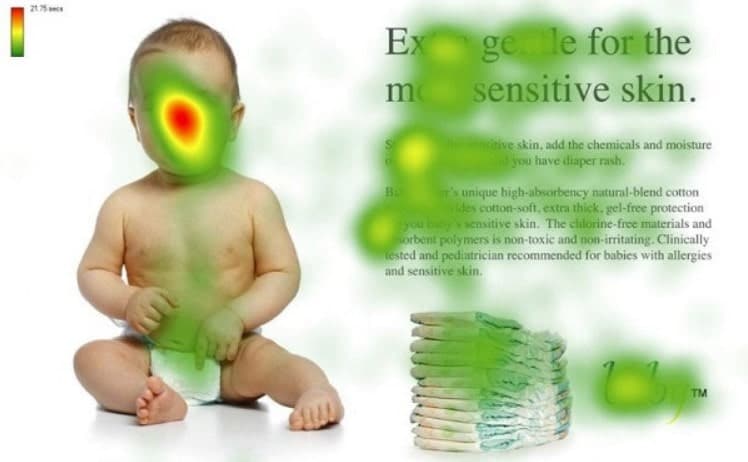

Mouse tracking (move/hover heatmaps) can hint at attention on desktop. It can also mislead you if you treat it like eye-tracking. People park their cursor, use trackpads differently, or scroll with the cursor sitting on a headline.

Decision rule: use hover heatmaps as a clue, not proof. If you need proof, use recordings and tests.

Screenshot idea: Move heatmap on a blog post showing cursor clustering around a comparison table, suggesting users are scanning, not reading paragraphs.

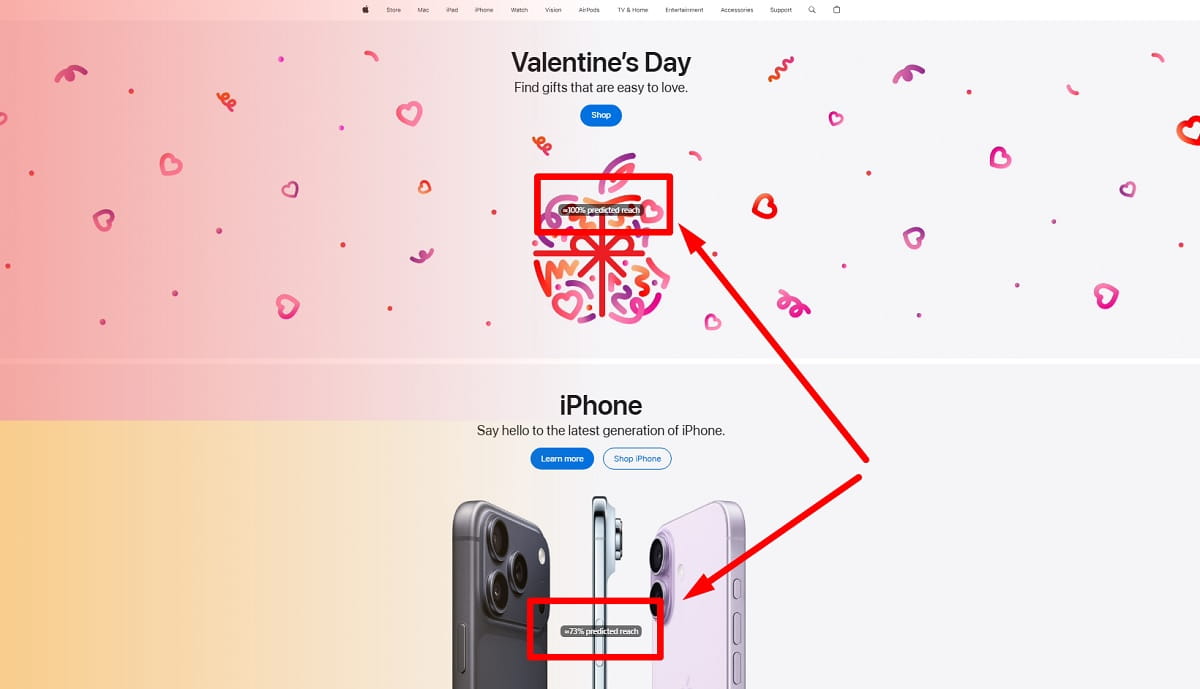

Attention Heatmaps

Some tools provide “attention” views (time on section, pauses, or engagement signals). Useful, but easy to over-trust.

Real-world friction: promo banners, chat widgets, and cookie banners can shift attention patterns. If the UI changed mid-collection, your attention map becomes a mash-up of two different pages.

Screenshot idea: Attention heatmap on a landing page showing heavy time spent on a confusing FAQ accordion and little time on the CTA area.

Zone, Element, Or Area Heatmaps

Instead of free-form “hot spots,” zone heatmaps aggregate interaction by element or section (hero, nav, product gallery, reviews, footer). This is great for stakeholder conversations because it’s easier to compare sections without arguing about pixels.
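Conceptually, a zone map just buckets interactions by section and reports each section’s share. The sketch below simplifies zones to vertical bands of the page. The zone names and pixel ranges are hypothetical; real tools usually key zones to elements or CSS selectors instead.

```python
from collections import Counter

# Hypothetical page layout: zone name -> (top_px, bottom_px), half-open ranges.
ZONES = {
    "nav": (0, 80),
    "hero": (80, 600),
    "reviews": (600, 1400),
    "footer": (1400, 1800),
}


def zone_for(y, zones=ZONES):
    """Name of the zone containing vertical position y, or 'other'."""
    for name, (top, bottom) in zones.items():
        if top <= y < bottom:
            return name
    return "other"


def zone_shares(click_ys, zones=ZONES):
    """Share of all clicks that landed in each zone."""
    counts = Counter(zone_for(y, zones) for y in click_ys)
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()} if total else {}


shares = zone_shares([40, 50, 300, 1500, 1600, 1700, 1750, 900])
print(shares)  # footer gets half the clicks, the nav only a quarter
```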

Most wins come from pricing, checkout, PDPs, and lead forms. Zone maps help you prioritize those areas quickly.

Screenshot idea: Zone heatmap showing the footer getting more clicks than the main navigation, indicating users are “lost” and scanning for trust or policies.

What Heatmaps Are Good For In CRO And UX

Heatmaps are best when you use them to reduce uncertainty and prioritize fixes. They’re not just “nice visuals.” They’re a way to stop guessing.

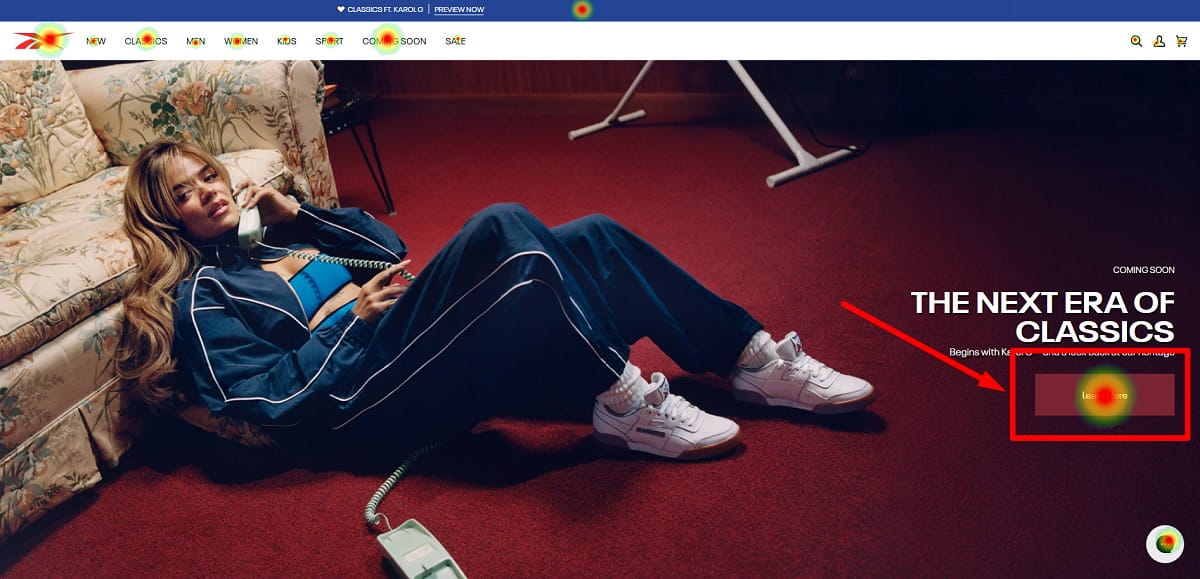

Finding UX Leaks Before You Spend More On Traffic

If you’re buying traffic or pushing content, heatmaps help you spot leaks that turn visits into waste: missed CTAs, confusing nav, and dead clicks on decorative elements. You don’t want to scale ads into a page that can’t convert.

If stakeholders keep editing the page mid-study, your data won’t line up. Freeze key page elements during collection whenever possible.

Prioritizing Changes Without Internal Debate

Heatmaps end “I think” discussions. When you show that users are tapping the wrong element 200 times in a day, it becomes less political. The team stops arguing about taste and starts talking about outcomes.

We once had a pricing page where everyone in the room loved the “clean minimal layout.” The click heatmap showed users hammering a non-clickable “Most Popular” badge like it was the plan selector. We made the badge clickable and clarified the selection state. It wasn’t a redesign. It was a small fix that removed confusion.

Validating UX Hypotheses For A/B Tests

A heatmap can suggest a hypothesis, such as “Users don’t see the CTA.” It shows where it hurts; only a test proves what fixes it.
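This guide doesn’t prescribe a statistical method. One common choice for comparing conversion rates between a control and a variant is a two-proportion z-test. Below is a minimal standard-library sketch (`statistics.NormalDist` needs Python 3.8+), with made-up example numbers. It assumes you fixed the sample size before the test and don’t stop early on a lucky peek.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled standard error).

    conv_a / n_a: conversions and visitors in control (A)
    conv_b / n_b: conversions and visitors in the variant (B)
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: 4.0% vs 5.0% conversion on 5,000 visitors each.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value says the difference is unlikely to be noise. It doesn’t say the heatmap’s explanation of *why* was right; that is what recordings are for.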

Diagnosing Funnel Drop-Offs With Page Context

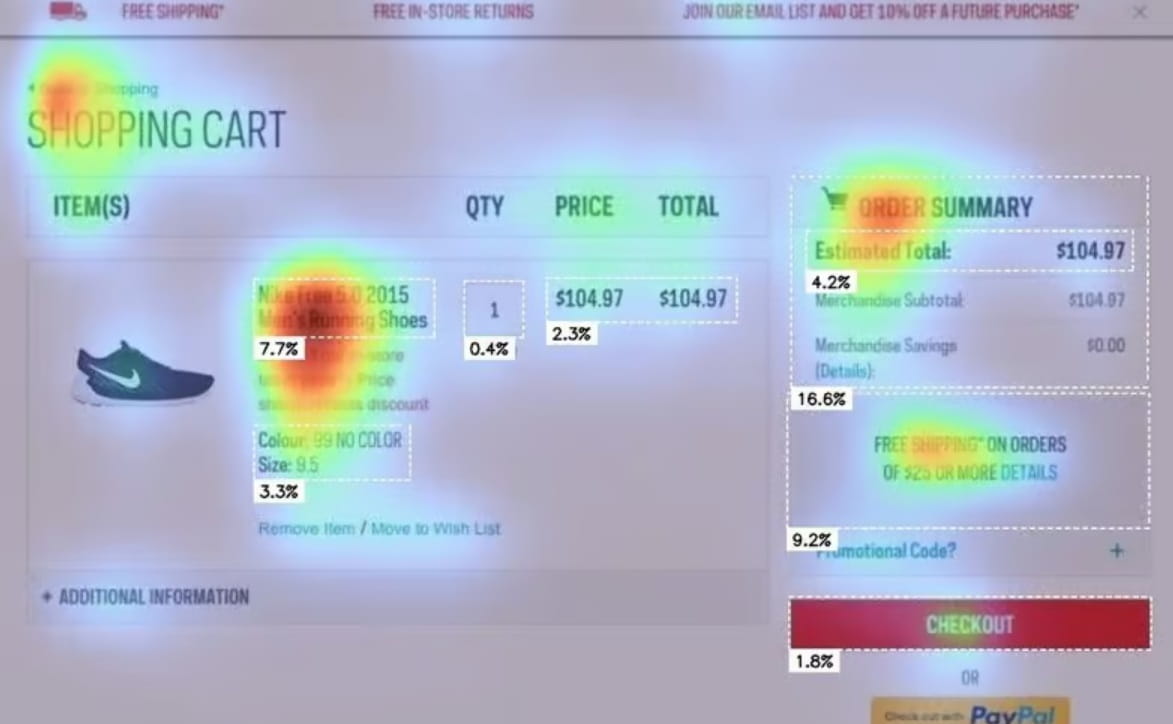

Analytics might show drop-off between “Add to cart” and “Checkout.” Heatmaps help you see what’s happening on those pages: are users clicking shipping info, stuck on coupon fields, or rage-clicking the payment selector?

Screenshot idea: Checkout click heatmap showing a cold “Place Order” button and hot clicks on “Shipping” labels, suggesting form confusion.

How To Read Heatmaps Without Fooling Yourself

Interpreting heatmaps is part pattern recognition, part discipline. The discipline is what most teams skip.

Start With A Single Question

If you open a heatmap without a question, you’ll find “interesting stuff” forever. Pick one:

- Are users finding the primary CTA?

- Are they trying to click non-clickable elements?

- Are they reaching the content that justifies the offer?

- Are they getting stuck in forms?

If you don’t define the question, every red spot looks like a priority.

Read It In Layers: Visibility → Interaction → Intent

- Visibility: scroll heatmap. Did they reach the section at all?

- Interaction: click heatmap. What did they try to do?

- Intent: recordings + metrics. Why did they do it, and did it lead to conversion?

Real-world friction: traffic seasonality can skew patterns. Your January heatmap might look different from your “holiday promo” heatmap. If the intent changes, the map changes.

Watch For The Classic Pitfalls

- Scroll ≠ read: pair with time on page, recordings, or attention signals.

- Hover ≠ intent: cursor behavior varies wildly by device and user.

- Clicks on non-clickable elements: often a design affordance problem, not “user stupidity.”

- Banner blindness: users learn to ignore anything that looks like an ad, even if it’s important.

- Novelty effect after changes: early behavior after a redesign can be weird. Let it stabilize before declaring victory.

One heatmap can tell the truth and still lead you to the wrong decision. That’s why you validate with segmentation and outcomes.

Use A Diagnostic Table Instead Of Guessing

| Heatmap Pattern | Likely Cause | What To Test Next |
|---|---|---|
| Hot clicks on a non-clickable image or headline | False affordance; users expect it to open details | Make it clickable; add a clear “View Details” link; improve visual cueing |
| Primary CTA is cold while secondary links are hot | CTA is buried, low contrast, unclear value, or competing distractions | Clarify CTA copy; reduce competing links; move CTA higher; test button style and message |
| Heavy activity on FAQs, shipping, returns, or trust elements | Uncertainty or lack of clarity in offer details | Bring key reassurance above the fold; simplify policies; add concise trust blocks near CTA |
| Scroll drop-off before key proof (reviews, pricing, features) | Weak first screen; slow load; content feels irrelevant | Tighten hero; add value proof earlier; improve speed; test shorter page vs better structure |
| Rage clicks around tabs, filters, or payment options | Broken UI, lag, confusing interactions, or poor tap targets | Fix interaction bugs; increase tap area; improve loading states; simplify choices |
| Many clicks on “coupon” field or promo elements in checkout | Users hunting for discounts; friction causing abandonment | Test hiding coupon behind link; add messaging about best price; simplify checkout steps |

Screenshot idea: Category page click heatmap showing users clicking product images but not the “Quick Add” button, suggesting the button is visually weak or mistrusted.

Segmentation Is The Difference Between Insight And Noise

If you do one thing after reading this guide, do this: Segment → Compare. Heatmaps without segmentation often tell a half-truth.

Most teams read the “average visitor.” Your revenue comes from specific segments.

Core Segments To Compare

- Mobile vs desktop: tap behavior changes everything. Hover data can be meaningless on mobile.

- New vs returning: returning users navigate faster; new users need reassurance and clarity.

- Paid vs organic: intent differs. Paid visitors may be colder or more impatient, depending on the ad.

- Geography or language: message clarity and trust expectations shift.

- Device type: iPhone and Android devices sometimes render sticky elements and overlays differently.

Real-world friction: stakeholders love launching new banners. But if a banner went live halfway through collection, segment by date range or restart the study. Otherwise you’re comparing two different pages and calling it “user behavior.”

How Segmentation Changes The Story

Example: a desktop click heatmap shows strong engagement with the pricing table, but mobile shows most taps on the hamburger menu and almost none on plan details. That’s not “mobile users don’t care.” That’s a layout or readability issue. The fix might be as simple as making plan info scannable, increasing tap targets, and moving proof higher.
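A “Segment → Compare” check boils down to computing click shares per element *within* each segment rather than across all traffic. Here is a small sketch with made-up events that mirror the pricing example. It assumes each click can be exported as a (segment, element) pair; those labels are hypothetical. It also shows how the blended average hides the split.

```python
from collections import Counter, defaultdict


def click_share_by_segment(events):
    """events: iterable of (segment, element) pairs.

    Returns {segment: {element: share of that segment's clicks}}.
    """
    counts = defaultdict(Counter)
    for segment, element in events:
        counts[segment][element] += 1
    result = {}
    for segment, c in counts.items():
        total = sum(c.values())
        result[segment] = {el: n / total for el, n in c.items()}
    return result


events = [
    ("desktop", "plan_cards"), ("desktop", "plan_cards"),
    ("desktop", "menu"), ("desktop", "cta"),
    ("mobile", "menu"), ("mobile", "menu"),
    ("mobile", "menu"), ("mobile", "plan_cards"),
]
shares = click_share_by_segment(events)
print(shares["desktop"]["plan_cards"], shares["mobile"]["plan_cards"])

# The unsegmented view blends both into one misleading number.
blended = Counter(element for _, element in events)
print(blended["plan_cards"] / len(events))
```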

Screenshot idea: Mobile click heatmap on a pricing page showing taps on the menu and back button area, while the plan cards get very few taps.

Heatmap Use Cases By Site Type And Page Type

Heatmaps work across ecommerce, SaaS, and content sites, but the questions change. Below are practical examples you can steal. Not theory. Page-by-page reality.

Ecommerce Use Cases

Homepage: Are users finding categories or getting stuck in the hero? If your homepage is a “brand story,” check whether visitors are actually moving into shopping paths.

Example: click heatmap shows users click the hero image more than the category tiles. Fix: make the hero link to the right category, or stop teasing and give a clear CTA.

Category Page (PLP): Are filters used? Are users clicking product images but missing “Quick Add”? If filters are cold, they may be hidden, confusing, or not valuable.

Real-world friction: filter UI changes during promos (holiday collections, limited stock) can shift behavior. Don’t compare apples to pumpkins.

Product Page (PDP): Do users reach reviews, shipping info, and size guides? Are there dead clicks on gallery images or thumbnails? If the size guide is hot, it might be too late on the page or unclear.

Cart: Are users clicking “Continue shopping” like it’s an exit? Are they stuck on shipping estimator fields? Does the cart feel like a decision point or a confusion point?

Checkout: Where do rage clicks happen? Payment method? Address fields? Coupon? If a field label gets heavy clicks, it might not be obvious what’s required.

Screenshot idea: Click heatmap on a checkout page showing repeated clicks on the “i” icon next to shipping, indicating uncertainty.

SaaS Use Cases

Pricing Page: Are users interacting with plan toggles, feature comparisons, or FAQs? If the toggle is hot but conversions aren’t moving, the issue may be unclear value per plan, not the UI.

Landing Page: Does the CTA get clicks? Do users scroll to proof? If testimonials are cold, they may be generic or placed too late.

Signup Flow: Are users dropping at password rules, SSO buttons, or verification steps? Heatmaps help you see if users are trying to click “Continue” before completing required fields.

On a SaaS signup form, we saw clicks clustering on the password field and a lot of rage clicks on “Create account.” Recordings showed users failing hidden password requirements. The fix was boring: show requirements clearly, use inline validation, and reduce surprise. Conversions moved because confusion dropped.

Screenshot idea: Lead form click heatmap showing users clicking the field label, not the input, suggesting tap targets are too small.

Content Site Use Cases

Blog Post: Do users reach the key section? Where do they drop? Are they clicking table-of-contents links? If your TOC is cold, it might not be visible or helpful.

Comparison Articles: Are readers engaging with tables, anchors, and “jump to” links? If they’re hovering and clicking on headings, they’re scanning. Make scanning easier instead of fighting it.

Lead Magnet Pages: Are users clicking on the image, the headline, the form, or the privacy text? If they click “privacy policy” like it’s the main event, trust might be weak.

Screenshot idea: Scroll heatmap on a long blog post showing that most users only reach the first 25% and never see the lead magnet CTA near the bottom.

A Step-By-Step Heatmap Study Workflow

This is the workflow teams can run repeatedly. It’s simple, but not simplistic. And yes, you must ship changes and recheck. Otherwise you’re collecting data as a hobby.

If the page is changing weekly, shorten your study window and lock the layout.

Heatmap Study Checklist (Before You Start)

- Pick one page type: PDP, pricing, checkout, landing, or signup. Don’t start with “the whole site.”

- Write one question: what decision do you need to make after this study?

- Confirm tracking works: tag installed, no conflicts, pages loading normally.

- Freeze major layout changes: no redesigns, no surprise banner experiments mid-collection.

- Define segments: at minimum mobile vs desktop; ideally new vs returning; paid vs organic.

- Decide success metrics: conversion rate, add-to-cart rate, form completion, CTA CTR.

Real-world friction: setup is rarely “5 minutes.” Someone needs access to the site, someone needs to approve scripts, and someone will ask if it slows the page. Plan for that, or you’ll never start.

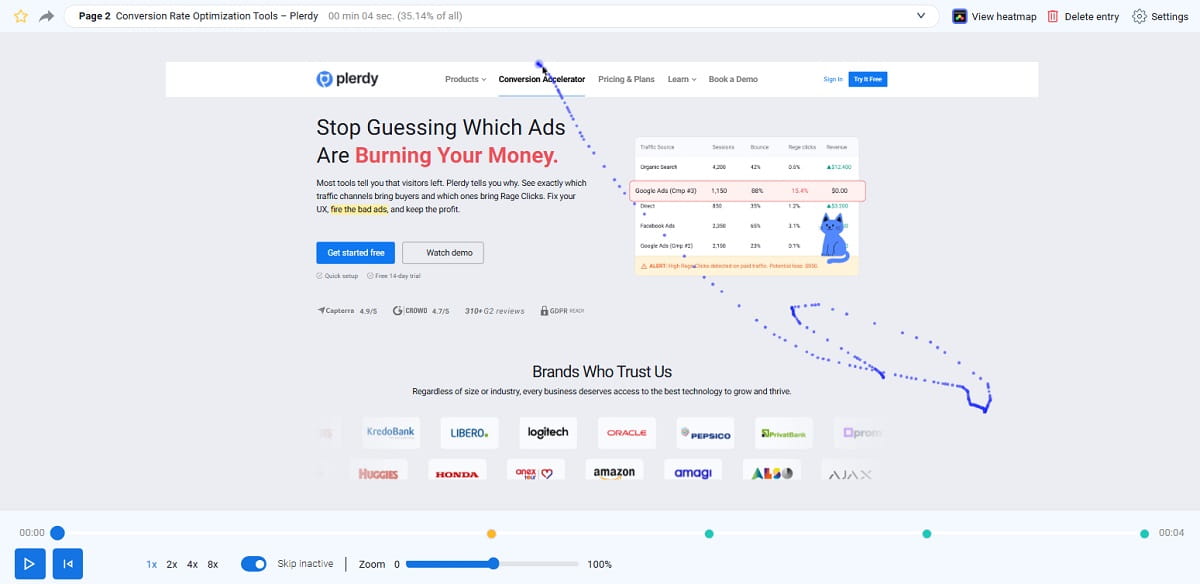

Step-By-Step Workflow (Setup → Collect → Analyze → Ship → Retest)

- Choose the highest-impact page. Start where money is made or lost: checkout, PDP, pricing, lead form. If traffic is low, pick the page with the clearest outcome and combine with longer collection time.

- Install and validate tracking. Confirm the tool loads, events appear, and page elements are recognized. Check both desktop and mobile. Make sure cookie/consent behavior matches your policy.

- Collect until patterns stabilize. Don’t rush based on a handful of visits. You want enough data that the map stops flipping its “hot spots” day to day. Sanity-check by comparing two time slices: do you see the same story or two different stories?

- Segment → Compare. Mobile vs desktop first. Then new vs returning. Then traffic sources. This step is non-negotiable.

- List the top 5 friction points. Dead clicks, missed CTAs, scroll drop-off, rage clicks, confusion around filters/forms. Keep it tight. If you list 20 issues, you won’t ship any.

- Validate with recordings and metrics. Watch session replay around the problem zones. Check if the behavior correlates with drop-offs, low CTR, or abandonment.

- Ship the smallest meaningful fix. Don’t redesign the page because a heatmap looks ugly. Fix the specific confusion: make the element clickable, clarify copy, move proof earlier, simplify the form.

- Retest and compare. Re-run the heatmap after changes. Compare click distribution, scroll reach, and the KPI you chose. Watch out for novelty effects in the first days.
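The “compare two time slices” sanity check from the collection step can be made concrete. Take the hottest elements in each slice and measure how much the two sets overlap (Jaccard similarity). This sketch assumes clicks are exported as element identifiers, which is hypothetical. Choosing the top 3 and reading the score are judgment calls, not a standard.

```python
from collections import Counter


def top_elements(clicked_elements, n=5):
    """Set of the n most-clicked element identifiers."""
    return {el for el, _ in Counter(clicked_elements).most_common(n)}


def hotspot_overlap(slice_a, slice_b, n=5):
    """Jaccard overlap of the top-n hot elements in two time slices (1.0 = same story)."""
    a, b = top_elements(slice_a, n), top_elements(slice_b, n)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


week1 = ["cta"] * 40 + ["hero_img"] * 30 + ["menu"] * 20 + ["footer"] * 5
week2 = ["cta"] * 38 + ["hero_img"] * 35 + ["faq"] * 18 + ["menu"] * 15
print(hotspot_overlap(week1, week2, n=3))  # FAQ replaced menu in the top 3
```

A low score means the hot spots are still moving. Keep collecting, or check whether the page changed mid-study.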

This isn’t “collect data.” It’s “collect, decide, ship, validate.” That’s the loop.

Screenshot idea: Before-and-after click heatmap on a landing page showing CTA clicks increasing after moving the CTA higher and removing competing links.

Heatmap Study Checklist (During Analysis)

- Confirm the page version: same layout, same key blocks, same offers during collection.

- Check for blockers: cookie banners, modals, chat widgets hiding CTAs on mobile.

- Look for false affordances: images, badges, headings that look clickable.

- Look for “cold” essentials: primary CTA, plan selector, add-to-cart, form submit.

- Pair with KPIs: don’t optimize clicks if conversions are the goal.

- Document assumptions: what you believe, what the map suggests, what you’ll test.

Real-world friction: people will ask for a “quick screenshot for Slack” and then treat it like final truth. Save yourself: write the question and the segment right above the screenshot in your notes. Otherwise the team debates the wrong thing for a week.

KPIs And Metrics To Pair With Heatmaps

Heatmaps show interaction data. But CRO lives and dies by outcomes. Pair them, or you’ll optimize for the wrong behavior.

Core Metrics That Play Well With Heatmaps

- Conversion rate: the main outcome metric for ecommerce orders, lead submissions, signups.

- CTA click-through rate (CTR): are users clicking the primary next step?

- Scroll depth: what percentage reaches key blocks (pricing, reviews, form, FAQ)?

- Bounce rate and exits: where do users leave the flow?

- Form completion rate: where do they abandon a form?

- Add-to-cart rate: for PDPs and PLPs, does engagement lead to cart?

- Error rates: validation errors, payment errors, “invalid address” issues.
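Most of these KPIs are simple ratios. What matters is using the same definitions before and after a fix. Below is a sketch with hypothetical counts and metric names. The denominators are assumptions: for example, some teams compute CTA CTR per page view rather than per session.

```python
def rate(numerator, denominator):
    """Safe ratio: 0.0 when there is no traffic yet."""
    return numerator / denominator if denominator else 0.0


# Hypothetical counts for one landing page over one study window.
page = {
    "sessions": 4000,
    "cta_clicks": 520,
    "form_starts": 300,
    "form_submits": 180,
    "signups": 96,
}

kpis = {
    "cta_ctr": rate(page["cta_clicks"], page["sessions"]),
    "form_completion": rate(page["form_submits"], page["form_starts"]),
    "conversion_rate": rate(page["signups"], page["sessions"]),
}
for name, value in kpis.items():
    print(f"{name}: {value:.1%}")
```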

If the KPI doesn’t move, the fix wasn’t a fix. It may still be good UX, but call it what it is.

Simple Pairing Examples (So You Don’t Overthink It)

- PDP click heatmap + add-to-cart rate: dead clicks on gallery + low add-to-cart often signals unclear CTA hierarchy or missing info.

- Pricing click heatmap + signup rate: heavy clicks on FAQ + low signup can signal uncertainty; bring proof and clarity earlier.

- Checkout click heatmap + abandonment rate: rage clicks on payment + drop-off can signal UI bugs or trust issues.

- Landing scroll heatmap + CTA CTR: if users don’t reach proof, the top section isn’t earning the scroll.

Screenshot idea: Heatmap + analytics overlay concept: scroll drop-off aligns with the moment the page shifts to dense text and loses scannability.

Common Heatmap Mistakes And How To Avoid Wrong Conclusions

Heatmaps are persuasive. That’s the danger. They look like truth. But without context, they can push you into confident mistakes.

Mistake: Treating A Heatmap Like A Verdict

A red spot doesn’t mean “change this.” It means “investigate this.” Use recordings to confirm the behavior and analytics to confirm impact.

If you can’t explain the behavior in one sentence, don’t ship a big change.

Mistake: Ignoring Page Context (Especially Overlays)

Cookie banners, shipping promos, sticky headers, chat widgets—these can shift clicks and reduce visibility. On mobile, a sticky bottom bar can cover important buttons and make the heatmap look “dead.”

Real-world friction: marketing adds a pop-up mid-week. Suddenly your scroll depth drops and everyone blames content. It’s the pop-up. Always check what changed.

Mistake: Over-Reading Hover Data

Hover heatmaps can be useful on desktop, but they’re not eye tracking. Treat them as directional.

Decision rule: if the decision is high-stakes (pricing, checkout, signup), don’t base it on hover alone. Validate with recordings and tests.

Mistake: “They Didn’t Scroll, So They Don’t Care”

Sometimes they don’t scroll because the first screen is doing its job. Sometimes they don’t scroll because it’s unclear what’s next. Sometimes they don’t scroll because the page loads slowly and they bounce.

Pair scroll heatmaps with load performance and bounce/exits. Otherwise you’re blaming content for technical problems.

Mistake: Mixing Traffic Types And Calling It A Single Story

A landing page can behave completely differently for brand traffic vs paid traffic. Segment it. If you don’t, your average heatmap will hide the truth.

If you merge “cold” and “warm” visitors, you’ll build a page that pleases nobody.

Mistake: Shipping Too Many Changes At Once

If you redesign the entire page after a heatmap review, you won’t know what caused the improvement (or the drop). Ship smaller changes that map to a clear hypothesis.

Real-world friction: stakeholders love “big redesign energy.” Your job is to protect learning. Ship the smallest change that fixes the leak.

Heatmaps Vs Session Recordings Vs Analytics Vs A/B Tests

If you’re choosing the wrong tool for the question, you’ll waste time. Here’s a practical decision map you can use in real work.

Decision Map: If Your Question Is X, Use Y

- “Where are people clicking or tapping?” → Use click heatmaps.

- “Do users see the section at all?” → Use scroll heatmaps and scroll depth.

- “Why are they stuck or confused?” → Use session replay.

- “Which step loses people in the funnel?” → Use analytics funnels plus heatmaps on the leaking steps.

- “Will this change increase conversions?” → Use an A/B test (or controlled rollout) with a defined KPI.

- “Is this an accessibility issue?” → Use UX audit checks (contrast, tap targets, readability) plus recordings and feedback.

Heatmaps are fast to learn from. Tests are slower but definitive. Analytics is broad but abstract. Recordings are detailed but time-consuming. Strong teams combine them instead of arguing which one is “best.”

Screenshot idea: A single UX issue shown through four lenses: analytics shows drop-off, heatmap shows dead clicks, replay shows confusion, test confirms fix.

When Heatmaps Are The Wrong First Step

- Very low traffic pages: heatmaps can be too noisy. Start with qualitative feedback, usability testing, or broader analytics.

- Highly dynamic pages: if content changes per user heavily, segment aggressively or focus on recordings and event analytics.

- When you already know the bug: if the checkout button is broken, fix it. Don’t “study” it.

Privacy And Consent Basics

This is not legal advice, but you do need a basic approach so heatmap programs don’t become a risk. Heatmaps and session replay tools often involve cookies or similar tracking and can collect interaction data that may be considered personal data depending on your setup and jurisdiction.

Practical Rules That Keep You Out Of Trouble

- Be transparent: disclose what you collect and why in your privacy policy and cookie notice.

- Respect consent settings: if your site requires opt-in for analytics or tracking, your heatmap tool should follow that logic.

- Mask sensitive inputs: avoid capturing form fields that contain personal or financial data. Use masking and exclusions where available.

- Limit access internally: not everyone needs replay access. Keep permissions tight.

- Don’t over-collect: track the pages you need, for the time you need, then move on.
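The consent and masking rules can be expressed as a gate that every recorded event passes through. This is an illustrative sketch only. The event shape and the field-name patterns are hypothetical, and most heatmap and replay tools mask inputs in the browser before data leaves the page, which is preferable to cleaning it up afterwards.

```python
import re

# Hypothetical field-name patterns treated as sensitive; extend for your own forms.
SENSITIVE_FIELD = re.compile(
    r"card|cvv|cvc|iban|password|ssn|email|phone|address", re.IGNORECASE
)


def mask_event(event):
    """Return a copy of an input event with sensitive typed values replaced."""
    masked = dict(event)
    if SENSITIVE_FIELD.search(masked.get("field", "")):
        masked["value"] = "[masked]"  # fixed token, so the value's length isn't leaked
    return masked


def prepare_for_storage(event, analytics_consent):
    """Drop the event entirely without consent; otherwise mask sensitive fields."""
    if not analytics_consent:
        return None
    return mask_event(event)


card = prepare_for_storage({"field": "card_number", "value": "4111 1111 1111 1111"}, True)
search = prepare_for_storage({"field": "search", "value": "running shoes"}, True)
no_consent = prepare_for_storage({"field": "search", "value": "running shoes"}, False)
print(card, search, no_consent)
```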

Real-world friction: legal and security reviews can slow setup. If you plan for it, it’s fine. If you pretend it won’t happen, the project dies in “pending approval” for a month.

Accessibility And UX Factors That Change Heatmap Patterns

Heatmaps show behavior, not intent. And behavior is shaped by usability and accessibility. If you ignore this, you’ll misread “cold” areas as lack of interest when the real issue is that people can’t comfortably interact with the page.

Common UX Factors That Shift Heatmaps

- Contrast and readability: if text is low contrast, users read less of it and scroll differently.

- Tap targets: small buttons cause missed taps and frustration on mobile (often showing as rage clicks around the area).

- Sticky elements: headers and cookie banners can hide content, distort scroll patterns, and steal clicks.

- Load performance: slow pages increase bouncing and reduce interaction depth.

- Visual hierarchy: if everything looks equally important, users click the wrong thing.
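Tap-target problems are one of the few items here you can check mechanically. WCAG 2.2 sets a 24 x 24 CSS pixel minimum in success criterion 2.5.8 (level AA) and a 44 x 44 enhanced target in 2.5.5 (level AAA). The sketch below flags elements under either bar. It assumes you’ve collected rendered element sizes, for example from browser dev tools; the element names and sizes are hypothetical. It ignores the spacing and inline-link exceptions in the actual criteria.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    width_px: float   # rendered width in CSS pixels
    height_px: float  # rendered height in CSS pixels


def undersized(targets, min_px=24):
    """Names of targets smaller than min_px in either dimension."""
    return [t.name for t in targets if t.width_px < min_px or t.height_px < min_px]


targets = [
    Target("promo_close_icon", 16, 16),
    Target("add_to_cart", 320, 48),
    Target("menu_toggle", 40, 40),
]
print(undersized(targets))      # below the 24 px minimum
print(undersized(targets, 44))  # below the 44 px enhanced target
```

If a flagged element also shows clustered or repeated taps on the mobile heatmap, that is a strong candidate for the “smallest meaningful fix.”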

If mobile users mis-tap, it’s usually your tap target, not their finger.

Screenshot idea: Mobile click heatmap showing clustered taps near a tiny close icon on a promo banner.

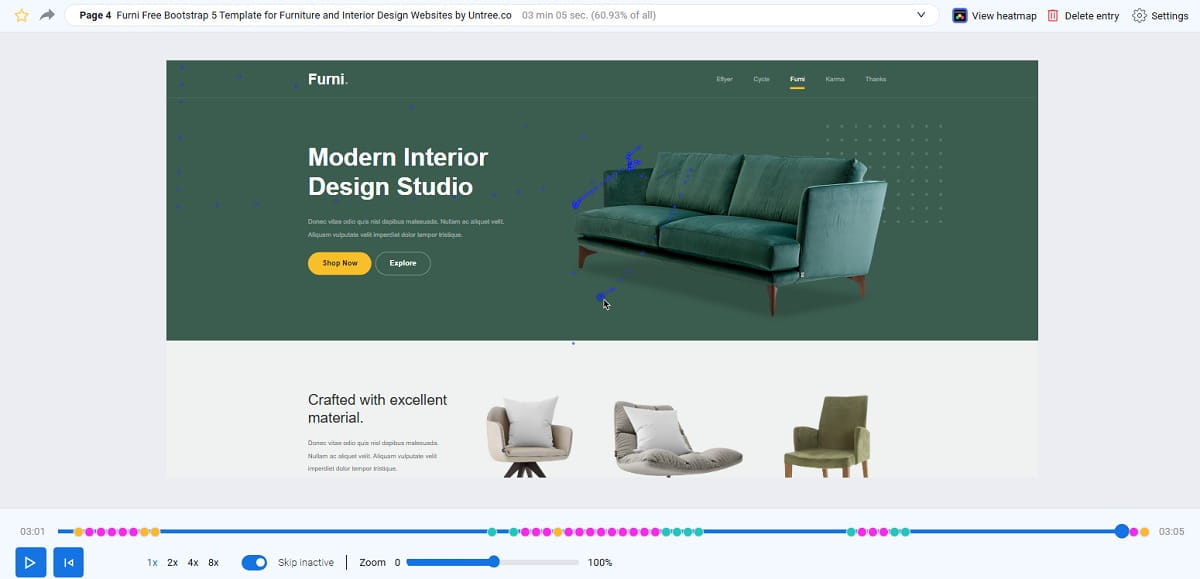

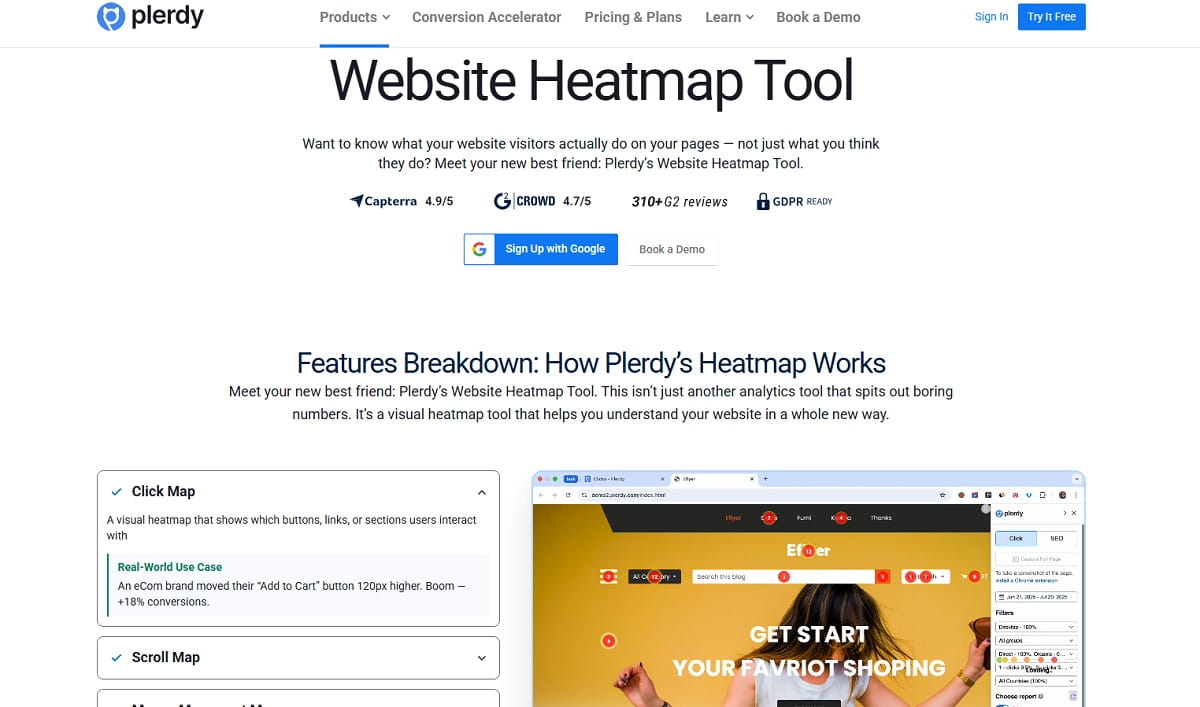

How Plerdy Fits Into A Practical Heatmap Program

Heatmaps become more valuable when you connect them to a repeatable CRO workflow: identify leaks, prioritize fixes, validate with behavior, then measure outcome.

Plerdy is built for teams who want to move from “we think the UX is fine” to “we found the leak, fixed it, and verified it.” You can use heatmaps alongside session replay and website analytics to see where users struggle, where they hesitate, and where the funnel quietly breaks.

Here’s a realistic way teams use it:

- Start with a problem page: PDP, pricing, checkout, or lead form.

- Use heatmaps to surface friction: dead clicks, missed CTAs, scroll drop-offs, confusion zones.

- Use session replay to confirm “why”: what the user expected, what blocked them, what they tried next.

- Ship small fixes and retest: verify change in interaction patterns and key KPIs.

If you want a straightforward place to begin, try a simple UX audit flow: pick your highest-traffic money page, run heatmaps by segment, then watch a handful of sessions around the biggest friction point. You’ll usually find a fix that’s smaller than you expected.

If you’re tired of guessing where your conversions leak, try Plerdy on one high-impact page for one week. Don’t boil the ocean. Fix one thing, then measure it.

Practical Examples You Can Copy (Page Type Playbook)

Below are quick “if you see X, do Y” examples across common pages. This section is intentionally concrete.

Homepage Examples

- If the hero image is hot but the CTA is cold: make the hero clickable or add a clear CTA directly on the hero. Reduce competing links in the first screen.

- If users click the logo repeatedly: navigation confusion. Make “Shop” or “Products” obvious, not hidden.

Product Page (PDP) Examples

- If the size guide is extremely hot: sizing uncertainty. Add fit notes, model info, clearer size selection, and reduce surprises.

- If users click on review stars but nothing happens: make the star rating link to the reviews section, and show a review summary higher on the page.

Category Page (PLP) Examples

- If filters are cold: they may be hidden, confusing, or irrelevant. Test a more visible filter UI and better defaults.

- If product images are hot but “Quick Add” is cold: improve button contrast, trust cues, and clarity of action.

Pricing Page Examples

- If users hammer the plan toggle: they’re price-sensitive or comparing. Make the difference between plans obvious and reduce ambiguity in feature lists.

- If the FAQ is hotter than the plans: uncertainty. Bring the top reassurance points closer to the plans and CTA.

Landing Page Examples

- If scroll drop-off happens before proof: your first screen isn’t earning the scroll. Tighten it and bring proof earlier.

- If users click trust badges but ignore the CTA: the CTA message may be weak. Test clearer value and lower perceived risk.

Blog Post Examples

- If TOC links are hot: users are scanning. Make headings more descriptive and add jump links near key sections.

- If outbound links get heavy clicks early: your intro might not be matching intent. Clarify the promise faster.

Cart And Checkout Examples

- If coupon field dominates clicks: discount hunting. Test hiding coupon behind a link, or messaging that reduces uncertainty (“best price applied”).

- If rage clicks cluster around payment: lag, validation errors, or trust concerns. Fix UI feedback and consider adding reassurance near payment options.

Screenshot idea: Cart click heatmap where “Continue Shopping” is the hottest element and “Checkout” is cold.

Next Steps Checklist

Here’s the simplest “do this next” list that works for most teams. Keep it boring. Boring ships.

- Pick one money page: PDP, pricing, checkout, or lead form.

- Write one question: what decision will you make after reviewing the heatmap?

- Install heatmaps + verify tracking: check both desktop and mobile.

- Collect until patterns stabilize: don’t react to tiny samples.

- Segment → Compare: mobile vs desktop, new vs returning, paid vs organic.

- Identify top 3 friction points: dead clicks, missed CTAs, scroll drop-off, rage clicks, form confusion.

- Validate with recordings and KPIs: confirm “why” and tie it to outcomes.

- Ship one small fix: the smallest change that removes a clear UX leak.

- Retest: rerun heatmaps and compare KPI movement.

- Repeat weekly or biweekly: momentum beats one giant “optimization project.”

If you want a practical starting point, run this checklist using Plerdy on a single high-traffic page. The goal isn’t to collect more data. It’s to find one UX leak, fix it, and confirm you stopped the bleed.

FAQ

What Is A Website Heatmap In Simple Terms?

A heatmap is a visual layer that shows aggregated user interaction—where people click, how far they scroll, and where they focus or hesitate—so you can spot friction and prioritize fixes.

Are Heatmaps Better Than Google Analytics?

They’re different. Analytics tells you what happened (drop-offs, conversion rate). Heatmaps show where behavior clusters on the page. The best work uses both, plus recordings when you need the “why.”

How Long Should I Collect Heatmap Data?

Long enough that patterns stabilize and don’t flip daily. If your traffic is low, collect longer or focus on fewer key pages. Sanity-check by comparing two time slices—do you see the same story?

What Are Dead Clicks And Why Do They Matter?

Dead clicks are clicks on elements that don’t do anything. They usually signal confusion, broken links, or misleading design cues. Fixing dead clicks often removes friction fast.

Do Scroll Heatmaps Show If People Read My Content?

No. Scroll shows how far they go, not whether they read. Pair scroll heatmaps with time-on-page, recordings, or section engagement signals if you need a stronger read on comprehension.

Should I Use Hover Heatmaps For Mobile?

Hover data is mostly a desktop clue. Mobile behavior is taps, scrolls, and gestures. Focus on click/tap heatmaps, scroll heatmaps, and recordings for mobile insights.

What Pages Should I Start With?

Start with the highest-impact step: checkout, product pages, pricing, or lead forms. If you’re unsure, start where you spend the most traffic (paid landing pages) or where analytics shows the biggest leak.

Can Heatmaps Replace A/B Testing?

No. Heatmaps suggest where friction is and what to fix. A/B tests prove if a change improves conversion. Use heatmaps to generate better test ideas, then validate.

Do Heatmaps Create Privacy Risks?

They can if you collect too much or ignore consent requirements. Use masking for sensitive fields, be transparent, respect consent settings, and limit access and collection scope.

Conclusion

Website heatmaps are simple on the surface and brutally honest in practice. They show you where visitors hesitate, misclick, and get confused—so you stop guessing and start fixing the real UX leaks. But a heatmap is still a diagnostic. It points to pain; it doesn’t prove the cure.

The teams that win don’t obsess over “hot” colors. They run a repeatable loop: define one question, collect until patterns stabilize, then Segment → Compare. Mobile vs desktop. New vs returning. Paid vs organic. That’s where the story becomes clear, and that’s where prioritization gets easier.

Use heatmaps to generate sharper hypotheses, then pair them with website analytics, session replay, and the KPI that matters (conversion rate, form completion, add-to-cart, checkout completion). If the KPI doesn’t move, the change wasn’t the fix—even if the map looks prettier.